As part of the development of the analysis tool Beaver, prototypes were made to investigate the best ways to handle GUI design, internationalization, charting integration and so on. A few (deliberately simple) examples of the techniques are shown in the PyQt page for general reference and free download, and the prototypes themselves are shown in the prototypes page.

We now have a working application, which does a lot of the basics already, including searching, filtering, and cross-checking. You can already answer many of the questions given as Beaver's goals. The following notes describe the progress of current development.

Following on from the prototypes, development started on the GUI side, including how to tie these functions together into a proper application. Again this concentrates on Python and PyQt, but doesn't (yet) include the matplotlib charting.

Title page and entry to two main functions

Same again in German

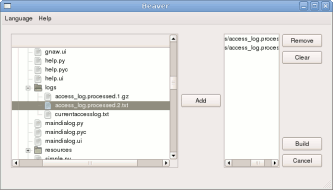

Building a logpile

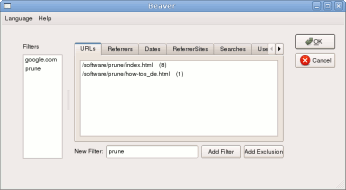

Gnawing a logpile

These screenshots show the first views of how the real GUI looks. These dialogs were produced using Qt Designer and the translations were entered with Qt Linguist, as described in the PyQt page.

Firstly they demonstrate the multilingual ability, and you can switch language at run-time with the "Language" menu. Currently only two languages are offered (English and German), but it should be straightforward to add more languages if volunteers help with the translations.

For the first step, building a logpile, the GUI offers a file tree display, and lets you add single files or whole directories to the list on the right. Directories are searched recursively for log files, which can be either in text format (".txt") or in gzipped text format (".gz"). Once all the log files have been added to the list, the "Build" button starts the reading of these files and the creation of the logpile file.
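As a rough illustration of the file collection step, the following sketch gathers ".txt" and ".gz" files from a mixed list of files and directories and reads both kinds line by line. It is not Beaver's actual code: the function names `find_log_files` and `read_log_lines` are hypothetical, and the UTF-8 encoding is an assumption about the log files.

```python
import gzip
from pathlib import Path

# File extensions treated as log files (taken from the description above).
LOG_SUFFIXES = {".txt", ".gz"}


def find_log_files(paths):
    """Return a sorted list of log files found in the given files and directories.

    Directories are searched recursively; anything without a matching
    extension is ignored.
    """
    found = set()
    for entry in map(Path, paths):
        candidates = entry.rglob("*") if entry.is_dir() else [entry]
        for candidate in candidates:
            if candidate.is_file() and candidate.suffix.lower() in LOG_SUFFIXES:
                found.add(candidate)
    return sorted(found)


def read_log_lines(path):
    """Yield the lines of a plain or gzipped text log, without trailing newlines."""
    path = Path(path)
    opener = gzip.open if path.suffix.lower() == ".gz" else open
    # Assumption: logs are UTF-8; undecodable bytes are replaced, not fatal.
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")
```

Using a set for the collected paths means a file added both directly and through its parent directory is only read once.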

The second step, shown in the last screenshot, is to "gnaw" the logpile to extract information. The different sets of information are presented in tabs, including information on URLs, referrer URLs, referrer sites, search queries, user agents (human or robot), IP addresses (anonymised) and HTTP status codes (for example 200 (OK) or 404 (not found)). Each tab shows a list of the items found and the count for each, sorted by count.
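The per-tab lists can be thought of as simple frequency counts over one field of each log entry. The sketch below shows the idea with `collections.Counter`; the record layout (a dict per page view) and the field names are assumptions made for illustration, not the format of the logpile.

```python
from collections import Counter


def count_items(records, field):
    """Return (item, count) pairs for one field, most frequent first.

    Records without a value for the field are skipped.
    """
    counts = Counter(record[field] for record in records if record.get(field))
    return counts.most_common()


# Hypothetical page views, one dict per log entry.
views = [
    {"url": "/index.html", "status": "200"},
    {"url": "/prune.html", "status": "200"},
    {"url": "/index.html", "status": "200"},
    {"url": "/missing.html", "status": "404"},
]

url_tab = count_items(views, "url")
status_tab = count_items(views, "status")
```

Here `url_tab` would supply the rows of a "URLs" tab and `status_tab` those of a status code tab.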

Note that the IP addresses have been "anonymised" in the logpile, which means that Beaver can't tell you exactly which IP address viewed which page. Instead it just keeps a counter of addresses when building the logpile, so the fourth address it sees is always listed as number "4" for all the page views made by that user. This lets you follow single viewing paths from page to page without being able to identify the actual users. For example, from any selected search query you can quickly see the entry page to your website and the other pages that user saw before leaving.
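The counter-based anonymisation described above could be sketched roughly as follows. This is only an illustration of the idea, not Beaver's implementation: the class name `IpAnonymiser` and the helper `viewing_paths` are hypothetical, and the input is assumed to be (address, URL) pairs in chronological order.

```python
class IpAnonymiser:
    """Map each distinct IP address to a running number (1, 2, 3, ...)."""

    def __init__(self):
        self._numbers = {}

    def number_for(self, ip):
        # The first time an address is seen it gets the next free number;
        # later sightings of the same address reuse that number.
        if ip not in self._numbers:
            self._numbers[ip] = len(self._numbers) + 1
        return self._numbers[ip]


def viewing_paths(hits):
    """Group URLs by anonymised visitor number, keeping the viewing order.

    Only the visitor number is kept in the result, never the address itself.
    """
    anonymiser = IpAnonymiser()
    paths = {}
    for ip, url in hits:
        paths.setdefault(anonymiser.number_for(ip), []).append(url)
    return paths
```

Because only the number is written out, the resulting paths still show which pages one visitor saw in sequence, but the mapping back to a real address is lost once the anonymiser object is discarded.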

In this tiny screenshot, two filters have been added, one to only show elements where the referrer site is "google.com", and the second to only show elements where the search query is "prune". The list in the URLs tab then shows the URLs on this website which resulted from those searches. Both normal filters and exclusion filters are offered.
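Combining normal and exclusion filters amounts to keeping only the entries that satisfy every filter in the list. The following sketch shows one way to express that; the `Filter` class, the field names and the sample data are assumptions for illustration and are not taken from Beaver's source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Filter:
    """A single filter on one field; exclude=True inverts the match."""

    field: str
    value: str
    exclude: bool = False

    def accepts(self, record):
        matches = record.get(self.field) == self.value
        return not matches if self.exclude else matches


def apply_filters(records, filters):
    """Keep the records accepted by all filters (an empty list keeps everything)."""
    return [r for r in records if all(f.accepts(r) for f in filters)]


# Hypothetical page views with the fields used in the example above.
views = [
    {"url": "/prune.html", "referrer_site": "google.com", "query": "prune"},
    {"url": "/index.html", "referrer_site": "google.com", "query": "beaver"},
    {"url": "/prune.html", "referrer_site": "example.org", "query": "prune"},
]

selected = apply_filters(
    views,
    [Filter("referrer_site", "google.com"), Filter("query", "prune")],
)
not_from_google = apply_filters(
    views, [Filter("referrer_site", "google.com", exclude=True)]
)
```

In this sketch `selected` holds the single view that came from "google.com" with the query "prune", while `not_from_google` shows the effect of an exclusion filter.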

More screenshots will follow as these functions are built up.

Version 0.2 of the application is now available to download. There may still be quirks, and there is definitely plenty of room for improvement, so it is cautiously called version 0.2 to show that it's just the beginning. Hopefully user comments and feedback will help improve it further.

Since version 0.1, the main changes have been:

The next version 0.3 will hopefully include at least some of the following improvements:

Further development notes and screenshots will be given here as work progresses.